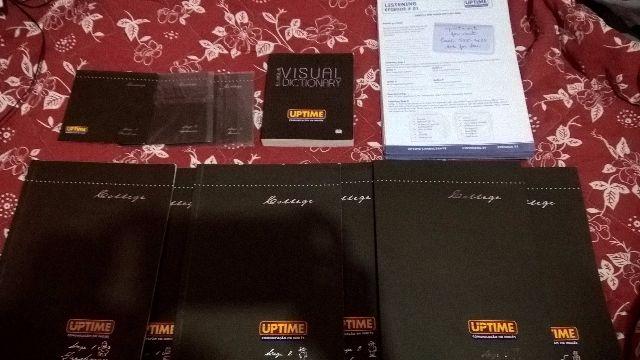

Apostila Ingles Uptime

Intro is a network daemon that runs on the platform and listens for statistics, like counters and timers. Packets are then sent to one or more pluggable backend services. The default service is. Every 10 seconds the stats sent to StatsD are aggregated and forwarded on to this backend service. It can be useful to see what stats are going through both sides of the connection – from the client to StatsD and then from StatsD to Graphite. Management Interface The first thing to know is there is a simple management interface built in that you can interact with.

Pimsleur ingles apostila pdf. In this case you can remove the files manually from the places indicated in the Read-Me file. System Uptime 6 53 26 PM 2 hours ago RP1428 1 21 2009 8 30 48 PM.

By using either telnet or you can find information directly from the command line. By default this is listening on port 8126, but that is configurable in StatsD. The simplest thing to do is send the stats command:% echo 'stats' nc statsd.domain.com 8126 uptime: 365 messages.lastmsgseen: 0 messages.badlinesseen: 0 graphite.lastflush: 5 graphite.lastexception: 365 This tells us a bit about the current state of the server, including the uptime, and the last time a flush was sent to the backend. Our server has only been running for 365 seconds.

Apostila Ingles Uptime Pdf

It also lets us know when the length of time since StatsD received its last message, bad lines sent to it, and the last exception. Things look pretty normal. You can also get a dump of the current timers: (echo 'timers' nc statsd.domain.com 8126) timers As well as a dump of the current counters: (echo 'counters' nc statsd.domain.com 8126) counters Take a look at the files generated to get an idea of the metrics StatsD is currently holding.

On the Wire Beyond that, its fairly simple to debug certain StatsD or Graphite issues by looking at whats going on in realtime on the connection itself. On the StatsD host, be sure you’re looking at traffic across the default StatsD listen port (8125), and specifically here I’m grep’ing for the stat that I’m about to send which will be called test.goulah.myservice:% sudo tcpdump -t -A -s0 dst port 8125 grep goulah listening on eth0, link-type EN10MB (Ethernet), capture size 65535 bytes Then we fake a simple client on the command line to send a sample statistic to StatsD like so: echo 'test.goulah.myservice:1 c' nc -w 1 -u statsd.domain.com 8125 Back on the StatsD host, you can see the metric come through: e.' A.test.goulah.myservice:1 c There is also the line of communication from StatsD to the Graphite host. Every 10 seconds it flushes its metrics. Start up another tcpdump command, this time on port 2003, which is the port carbon is listening on the Graphite side:% sudo tcpdump -t -A -s0 dst port 2003 grep goulah listening on eth0, link-type EN10MB (Ethernet), capture size 65535 bytes Every 10 seconds you should see a bunch of stats go. This is what you are flushing into the Graphite backend. In our case I’m doing a grep for goulah, and showing the data aggregated for the metric we sent earlier.

Notice there are two metrics here that look slightly different than the metric we sent though. StatsD sends two lines for every metric. The first is the aggregated metric prefixed with the stats namespace. StatsD also sends the raw data prefixed by statscounts.

This is the difference in the value per second calculated and the raw value. In our case they identical: stats.test.goulah.myservice 0 statscounts.test.goulah.myservice 0 Conclusion Now we can get a better understanding of what StatsD is doing under the covers on our system. If metrics don’t show up on the Graphite side it helps to break things into digestible pieces to understand where the problem lies.

If the metrics aren’t even getting to StatsD, then of course they can’t make it to Graphite. Or perhaps they are getting to StatsD but you are not seeing the metrics you would expect when you look at the graphs. This is a good start on digging into those types of problems.

Customer Presentation - Denver Water. 1. Copyright © 2014 Splunk Inc.